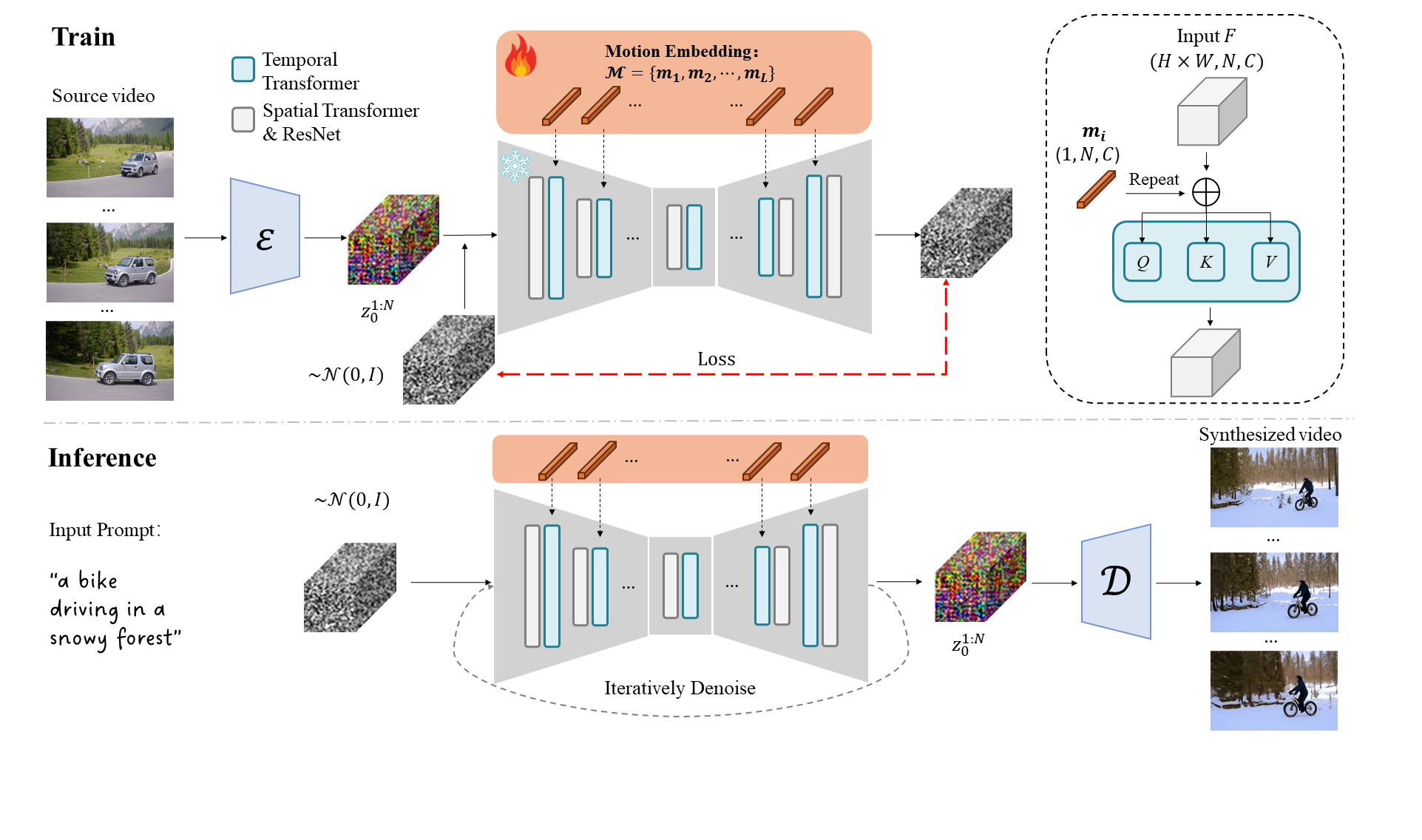

Motion Embeddings

We propose Motion Embeddings, a set of explicit, temporally coherent one-dimensional embeddings derived from a given video. This representation makes video motion customization efficient: it requires fewer than 0.5 million parameters and less than 10 minutes of training time.